Does ChatGPT change its persona when switching between languages?

#language_alsomatters


A Czech proverb says, "Learn a new language and get a new soul"; a Persian saying goes, "A new language is a new life"; and a Turkish one claims, "One who speaks only one language is one person, but one who speaks two languages is two people." Indeed, many people who speak several languages will readily confirm that they neither feel nor sound the same in each of them.

Does the same hold for a large language model (LLM) like ChatGPT, which was trained on text in many languages? Does the model's output change depending on the language of the prompt?

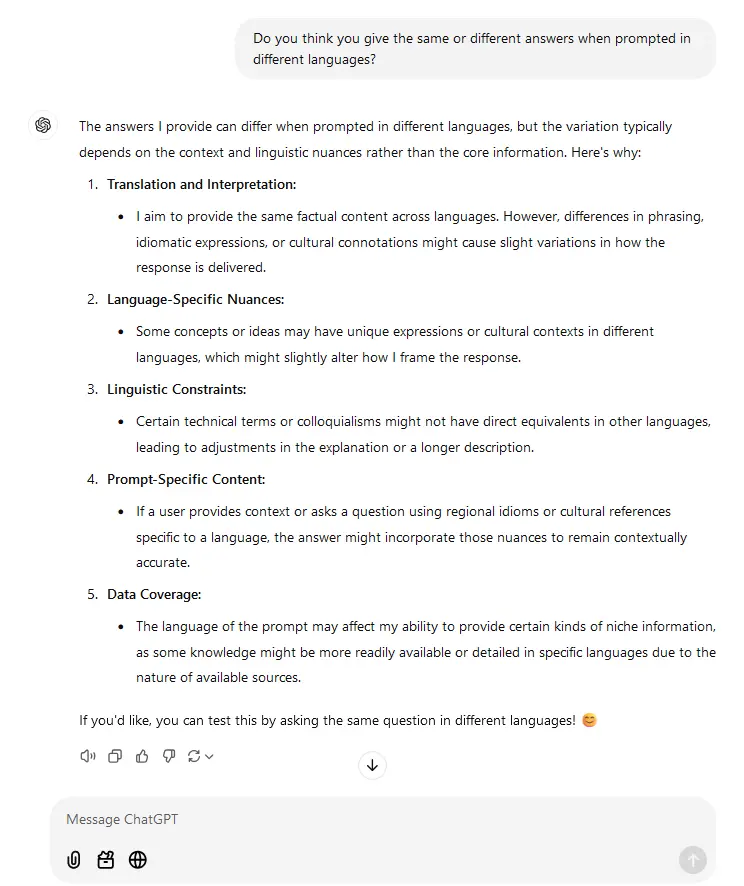

To begin with, we opened a new chat and asked ChatGPT directly about the approach it applies, treating it as if it were a real person.

According to the generated answer, the model aims to keep the content the same regardless of language, unless the prompt involves regional or cultural specifics. It also admits, however, that some information may be unavailable or less detailed in certain languages, which can affect the output.

Since an LLM can only draw on the data it was trained on, this claim should hold especially when a term or phenomenon exists in only one language (or a few), such as enzuterre in Basque or jouska in English.

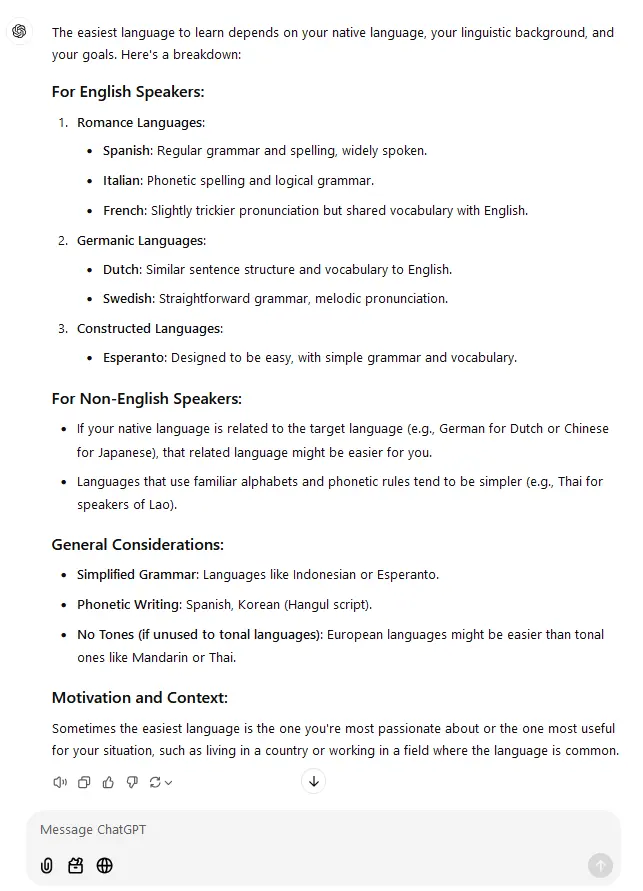

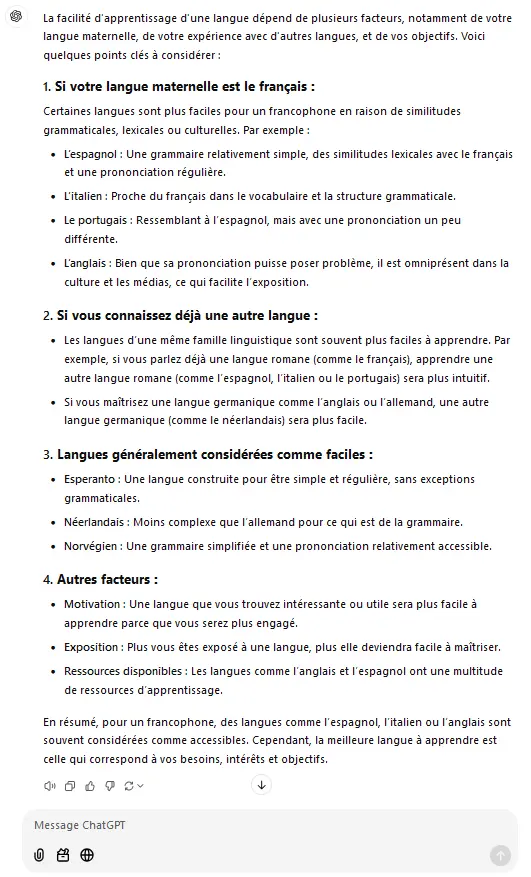

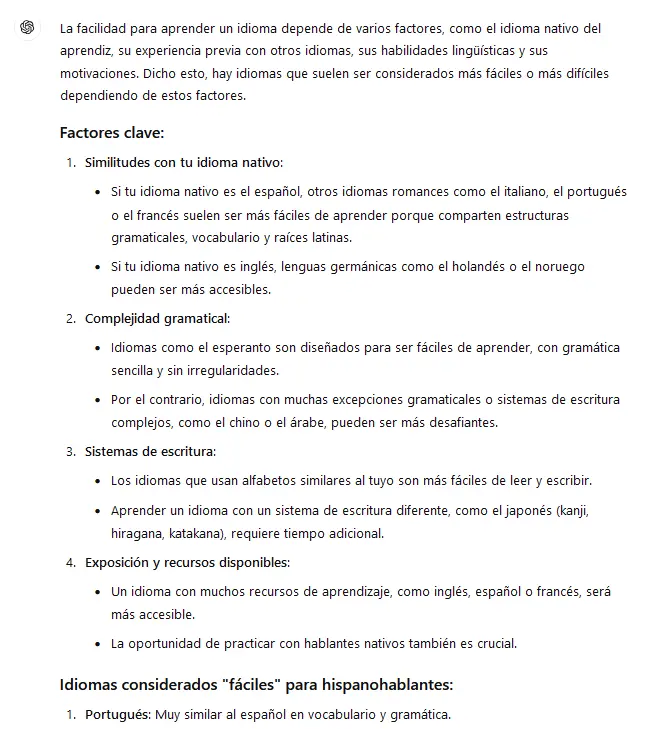

ChatGPT encouraged us to test its performance by asking a question in different languages, and we gladly accepted the invitation. We put the query "What language is the easiest to learn?" in English, French, Spanish, and Swedish, using a separate chat for each language. Below are the results.

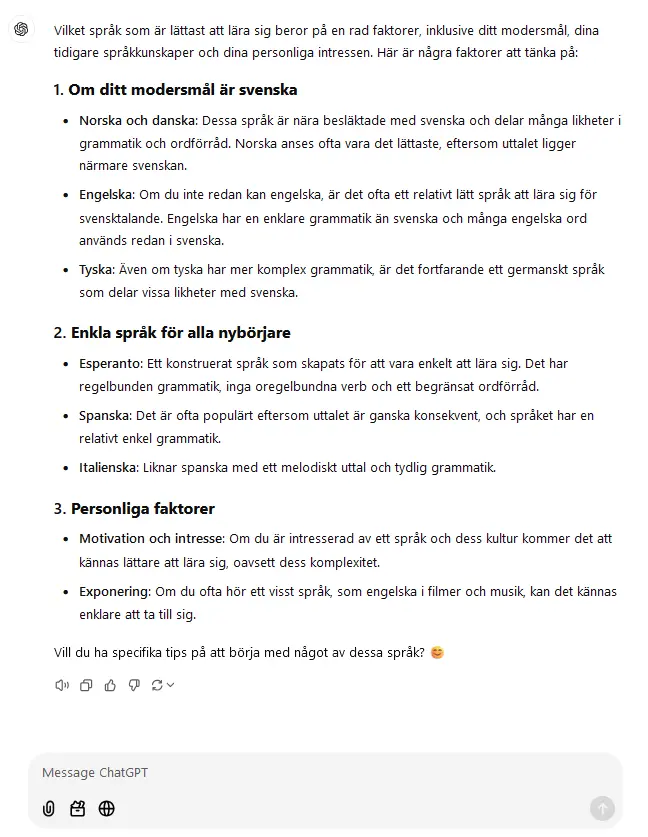

Prompts: 1 - What language is the easiest to learn? (Eng); 2 - Quelle langue est la plus facile à apprendre ? (Fr). ChatGPT, GPT-4o - Jan 04, 2025

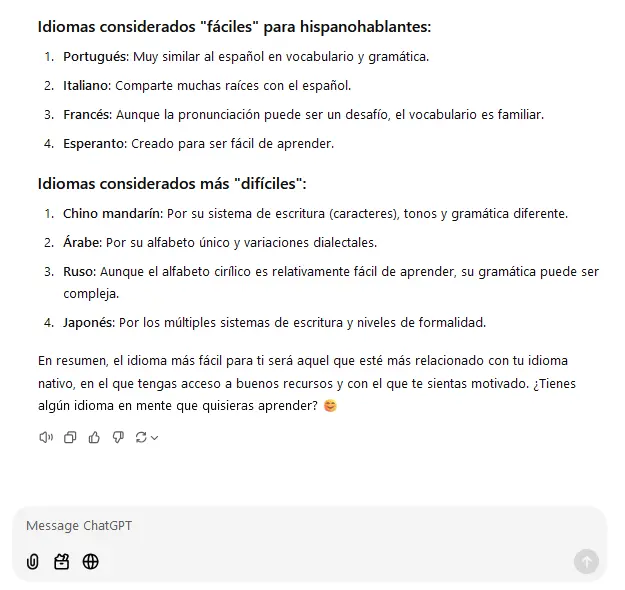

Prompt: ¿Qué idioma es más fácil de aprender? (Spa). ChatGPT, GPT-4o - Jan 04, 2025
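For readers who would rather script the comparison than paste prompts by hand, the setup above (the same question, one independent chat per language) might be sketched roughly as follows. This is only an illustration, not the procedure we used: `run_comparison`, `fake_ask`, and the `ask` callable are hypothetical names, and `ask` stands in for whatever function sends a single prompt to a model in a fresh conversation. Only the three prompts quoted in the captions are included.

```python
from typing import Callable, Dict

# The prompts exactly as quoted in the captions above.
PROMPTS: Dict[str, str] = {
    "en": "What language is the easiest to learn?",
    "fr": "Quelle langue est la plus facile à apprendre ?",
    "es": "¿Qué idioma es más fácil de aprender?",
}


def run_comparison(
    ask: Callable[[str], str],
    prompts: Dict[str, str] = PROMPTS,
) -> Dict[str, str]:
    """Send each prompt through `ask` once and collect the replies by language.

    Assumption: every call to `ask` starts a new conversation, so that
    one language's answer cannot influence the next.
    """
    return {lang: ask(text) for lang, text in prompts.items()}


def fake_ask(prompt: str) -> str:
    # Hypothetical stand-in for a real model call, so the sketch runs offline.
    return f"[reply to: {prompt}]"


if __name__ == "__main__":
    for lang, answer in run_comparison(fake_ask).items():
        print(lang, "->", answer)
```

Swapping `fake_ask` for a function that calls an actual chat model would make it possible to compare the replies side by side.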

Chief Editor
